What is Learning?

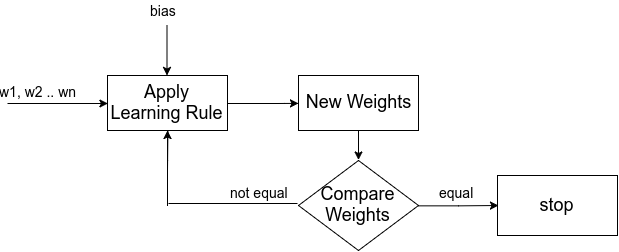

In a neural network, a learning rule describes how the machine learns. It is a mathematical procedure for improving the performance of an Artificial Neural Network (ANN).

The learning rule is one of the most important factors in determining the accuracy of an ANN.

Synapse

The dictionary meaning of synapse is togetherness or conjunction. The purpose of a synapse is to pass signals from one neuron to a target neuron. It is the basis on which neurons interact with each other.

Presynaptic and Postsynaptic Neuron

Information flow is directional. The neuron that releases the chemical called a neurotransmitter is the presynaptic neuron, and the neuron that receives the neurotransmitter is the postsynaptic neuron.

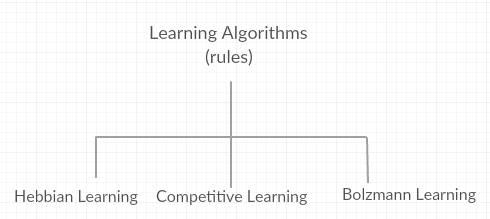

Learning Rules

Hebbian Learning

It is one of the oldest learning algorithms. A synapse (connection) between two neurons is strengthened if neuron A, on one side of the synapse, is near enough to excite neuron B, on the other side, and repeatedly or persistently takes part in firing it. This leads to some growth process or metabolic change in one or both cells such that A's efficiency, as one of the cells firing B, is increased.
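As a rough illustration, the simplest form of this idea is often written as a weight change proportional to the product of presynaptic and postsynaptic activity. The sketch below assumes that plain product form; the function name `hebbian_update`, the learning rate `eta`, and the sample values are illustrative choices, not taken from the text.

```python
import numpy as np

def hebbian_update(w, x, y, eta=0.1):
    """Return the weights after one plain Hebbian step: w_ji += eta * y_j * x_i."""
    # The outer product pairs every postsynaptic activity y_j with every
    # presynaptic activity x_i, so a weight grows only when both are active.
    return w + eta * np.outer(y, x)

# Two presynaptic inputs feeding one postsynaptic neuron (illustrative values).
w = np.zeros((1, 2))
x = np.array([1.0, 0.0])   # only the first input is active
y = np.array([1.0])        # the postsynaptic neuron fires
w = hebbian_update(w, x, y)
```

Only the weight from the active input changes; the connection from the silent input stays at zero. Note that this plain form lets weights grow without bound, which is one reason practical variants add some kind of normalization or decay.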

Generalization of Hebbian Rule

The four properties that characterize a Hebbian synapse are:-

Time-dependent mechanism:- The modification of a Hebbian synapse depends on the exact time of occurrence of the presynaptic and postsynaptic activities.

Local mechanism:- A synapse holds information-bearing signals. This locally available information is used by a Hebbian synapse to produce a local synaptic modification that is input specific.

Interactive mechanism:- The change in a Hebbian synapse depends on the activity levels on both sides of the synapse (i.e. the presynaptic and postsynaptic activities).

Conjunctional or correlational mechanism:- The co-occurrence of presynaptic and postsynaptic activities is sufficient to produce a synaptic modification, which is why it is referred to as a conjunctional synapse. The synaptic change also depends on the correlation between presynaptic and postsynaptic activities, which is why it is also called a correlational synapse.

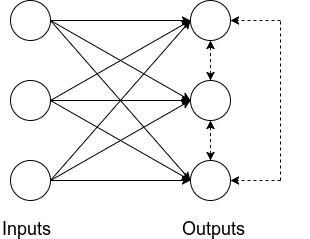

Competitive Learning

In competitive learning, the output neurons compete among themselves to be fired. Unlike in Hebbian learning, only one output neuron can be active at a time. It is a form of unsupervised learning.

It plays an important role in the formation of topographic maps.

The three basic elements of a competitive learning rule are:-

- A set of neurons that are all the same except for some randomly distributed synaptic weights, and which therefore respond differently to a given set of input patterns.

- A limit imposed on the “strength” of each neuron.

- A mechanism that allows the neurons to compete among themselves for a given set of inputs, so that only one output neuron wins. The winning neuron is called a winner-takes-all neuron.

The internal activity $v_j$ of the winning neuron $j$ must be the largest among all the neurons for a specified input pattern $x$. The output signal $y_j$ of the winning neuron is set equal to one; the output signals of all the neurons that lose the competition are set to zero.

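The change $\Delta w_{ji}$ applied to synaptic weight $w_{ji}$ is defined by

$$
\Delta w_{ji} =
\begin{cases}
\eta \, (x_i - w_{ji}) & \text{if neuron } j \text{ wins the competition} \\
0 & \text{if neuron } j \text{ loses the competition}
\end{cases}
$$

where $\eta$ is the learning-rate parameter and $x_i$ is the $i$-th component of the input pattern $x$.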
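A minimal sketch of one competitive step follows. It assumes the standard winner-takes-all rule, in which only the winning neuron moves its weight vector toward the input and all other weights stay fixed. The function name `competitive_step`, the learning rate `eta`, and the sample weights are illustrative assumptions.

```python
import numpy as np

def competitive_step(W, x, eta=0.5):
    """One winner-takes-all step; W holds one row of weights per output neuron."""
    v = W @ x                      # internal activity v_j of every output neuron
    winner = int(np.argmax(v))     # neuron with the largest internal activity
    y = np.zeros(W.shape[0])
    y[winner] = 1.0                # winner outputs 1, all losers output 0
    W = W.copy()                   # leave the caller's array untouched
    W[winner] += eta * (x - W[winner])   # only the winner moves toward x
    return W, y, winner

W = np.array([[1.0, 0.0],
              [0.0, 1.0]])
x = np.array([0.6, 0.8])
W_new, y, winner = competitive_step(W, x)
```

Here the second neuron has the larger internal activity, so it wins and its weight vector moves halfway toward the input, while the first neuron's weights are unchanged.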

Boltzmann Learning

Boltzmann learning is statistical in nature. It is derived from the field of thermodynamics. It is similar to an error-correction learning rule. However, in Boltzmann learning we take the difference between the probability distributions of the system under its two modes of operation, instead of the direct difference between the actual output and the desired output.

The Boltzmann learning rule is slower than the error-correction learning rule because the state of each individual neuron, in addition to the system output, is taken into account.

The neurons operate in a binary manner, with +1 representing the on state and -1 the off state. The machine is characterized by an energy function $E$:

$$
E = -\frac{1}{2} \sum_{j} \sum_{\substack{i \\ i \neq j}} w_{ji} \, s_i \, s_j
$$

where $s_i$ is the state of neuron $i$ and $w_{ji}$ is the synaptic weight between neurons $i$ and $j$. The condition $i \neq j$ means that none of the neurons in the machine has self-feedback.

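The probability of flipping the state of neuron $j$ from $s_j$ to $-s_j$ is

$$
P(s_j \to -s_j) = \frac{1}{1 + \exp(-\Delta E_j / T)}
$$

where $\Delta E_j$ is the energy change resulting from the flip and $T$ is a pseudo-temperature.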
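The energy function and the flip probability can be sketched numerically as follows. This assumes the usual logistic form 1 / (1 + exp(-ΔE / T)) with a pseudo-temperature `T`, and it assumes that ΔE is measured as the energy decrease caused by the flip (energy before minus energy after), so that flips which lower the energy are favoured. The text does not state the sign convention, so treat that choice, along with the function names and sample weights, as assumptions.

```python
import numpy as np

def energy(W, s):
    """E = -1/2 * sum over i != j of w_ji * s_i * s_j (W symmetric, zero diagonal)."""
    return -0.5 * s @ W @ s

def flip_probability(W, s, j, T=1.0):
    """Probability of flipping neuron j from s_j to -s_j at pseudo-temperature T."""
    s_flipped = s.copy()
    s_flipped[j] = -s_flipped[j]
    # Assumed convention: delta_E is the energy decrease produced by the flip.
    delta_E = energy(W, s) - energy(W, s_flipped)
    return 1.0 / (1.0 + np.exp(-delta_E / T))

# Two neurons joined by a positive weight, currently in opposite states.
W = np.array([[0.0, 1.0],
              [1.0, 0.0]])
s = np.array([1.0, -1.0])
E = energy(W, s)
p = flip_probability(W, s, j=1)
```

With a positive weight between them, the two neurons have lower energy when they agree, so flipping the second neuron to match the first is more likely than not. Raising `T` pushes the probability toward 0.5, making flips closer to random.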

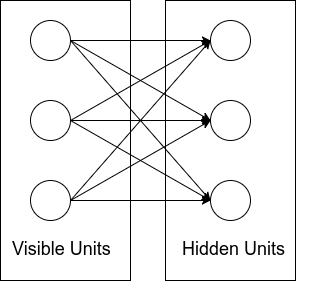

The neurons of a Boltzmann machine are divided into two functional groups, visible and hidden. The visible neurons act as an interface between the network and the environment in which it operates, whereas the hidden neurons always operate freely.

The Boltzmann machine has two modes of operation:

Clamped condition:- The visible neurons are clamped onto specific states determined by the environment.

Free-running condition:- All the neurons, both visible and hidden, are allowed to operate freely.

Boltzmann learning uses only locally available observations under the two modes of operation: clamped and free-running.
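The text does not spell out the weight update itself. A commonly cited form changes each weight in proportion to the difference between the correlation of the two connected neurons in the clamped condition and their correlation in the free-running condition. The sketch below assumes that form; the function names, the learning rate `eta`, and the hand-written state samples (which stand in for states that would normally be sampled from a running machine) are all illustrative.

```python
import numpy as np

def correlations(states):
    """Average of s_j * s_i over sampled state vectors (one sample per row)."""
    states = np.asarray(states, dtype=float)
    return states.T @ states / len(states)

def boltzmann_update(W, clamped_states, free_states, eta=0.1):
    """Assumed rule: dw_ji = eta * (clamped correlation - free-running correlation)."""
    rho_plus = correlations(clamped_states)    # visible neurons clamped
    rho_minus = correlations(free_states)      # all neurons running freely
    dW = eta * (rho_plus - rho_minus)
    np.fill_diagonal(dW, 0.0)                  # no self-feedback: keep w_jj = 0
    return W + dW

# Hand-written samples: the two neurons always agree when clamped,
# and are uncorrelated when running freely.
clamped = [[1, 1], [1, 1], [-1, -1], [-1, -1]]
free = [[1, 1], [1, -1], [-1, 1], [-1, -1]]
W = boltzmann_update(np.zeros((2, 2)), clamped, free)
```

Each weight update needs only the states of the two neurons it connects, which is what makes the rule local. In this example the connecting weight increases, pulling the free-running behaviour toward the agreement seen in the clamped condition.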